Dear Prof. Jan Schnupp,

I really appreciate your very timely response, and I apologize for my delayed reply.

I would like to clarify my current research activities and explain why I am exploring the use of ANF and MSO firing rates for localization tasks. Perhaps I did not articulate my intentions clearly in my previous email.

I am developing a localization model that is applicable to both normal-hearing and hearing-impaired listeners, so I chose to use Zilany's model instead of a gammatone filter. From this model I can obtain the ANF firing rates.

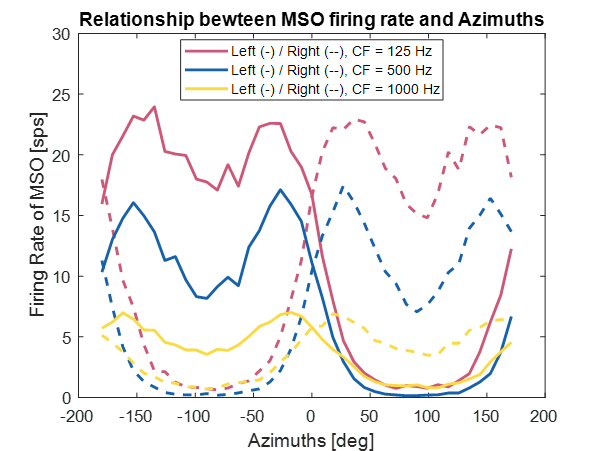

Furthermore, I am employing another model to simulate the MSO, using HRTFs convolved with a sine tone as input. This model reveals a nonlinear relationship between the azimuth/elevation angles and the averaged MSO firing rates for each characteristic frequency, as illustrated below. Using regression methods, I attempt to predict the angles from the MSO firing rates. However, this approach presents a notable challenge: front-back confusion is evident. To address this, I am exploring alternative cues, including the ANF firing rate. Unfortunately, leveraging ANF firing-rate information across multiple frequencies has not yielded success thus far.
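
The front-back ambiguity can be illustrated with a minimal sketch. It does not use the MSO model itself. It assumes a simplified far-field, two-point-receiver approximation in which the ITD is proportional to the sine of the azimuth. The inter-ear distance, the speed of sound, the azimuth convention (0 degrees in front, 180 degrees behind), and the function names are assumptions made only for this illustration.

```python
import numpy as np

# Assumed constants, for illustration only.
EAR_DISTANCE_M = 0.175    # assumed distance between the two ears
SPEED_OF_SOUND = 343.0    # m/s, assumed


def itd_two_point(azimuth_deg):
    """Far-field ITD (seconds) for two point receivers.

    Assumed convention: 0 deg = front, 90 deg = one side, 180 deg = back.
    The path-length difference is EAR_DISTANCE_M * sin(azimuth).
    """
    az = np.deg2rad(azimuth_deg)
    return EAR_DISTANCE_M / SPEED_OF_SOUND * np.sin(az)


def front_back_mirror(azimuth_deg):
    """Azimuth mirrored across the interaural axis (front <-> back)."""
    return (180.0 - np.asarray(azimuth_deg, dtype=float)) % 360.0


if __name__ == "__main__":
    front = np.array([10.0, 30.0, 60.0, 330.0])
    back = front_back_mirror(front)
    for f, b in zip(front, back):
        # Each front azimuth and its mirrored back azimuth give the same ITD.
        print(f, b, itd_two_point(f), itd_two_point(b))
```

Under this approximation, a regression that receives only an ITD-like quantity gets identical inputs for an azimuth and its front-back mirror, so it has no way to separate the two targets without an additional cue.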

The MSO model I am using, detailed in https://doi.org/10.3389/fnins.2018.00140, predicts ITD from the MSO, and I am considering applying a similar method to predict azimuths.

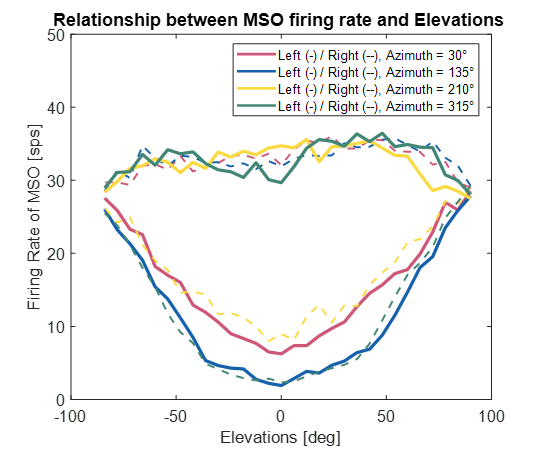

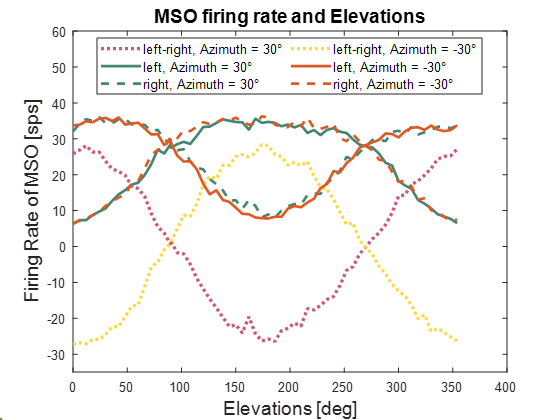

Yes, I also see this up-down confusion when I try to predict the elevation over the range of -90 to 270 degrees, as shown below.

Could you suggest any references or strategies for resolving these confusions by taking the shape of the cones of confusion into account?

Thanks in advance,

Warm regards,

Qin

Sent: Wednesday, 26 February 2025 07:26:43

To: Qin Liu

Cc: AUDITORY@xxxxxxxxxxxxxxx

Subject: Re: [AUDITORY] Seeking advice on using ANF firing rate to resolve front-back confusion in sound localization model

Dear auditory list,

I am currently working on a project involving sound localization using firing rates from auditory nerve fibers (ANFs) and the medial superior olive (MSO). However, I have encountered an issue: using MSO firing rates alone, I can distinguish left from right, but not front from back.

I am considering whether auditory nerve fiber (ANF) firing rates might provide a solution, but I am uncertain how to utilize them effectively. For instance, I have experimented with analyzing the positive gradients of ANF firing rates but have not yet achieved meaningful results.

Could anyone suggest an auditory metric derived from binaural signals, ANF firing rates, or MSO firing rates that could classify front/back sources without relying on HRTF template matching? Any insights or alternative approaches would be invaluable to my work.

Thank you in advance. I sincerely appreciate any guidance you can offer.

Best regards,

Qin Liu

Doctoral Student

Laboratory of Wave Engineering, École Polytechnique Fédérale de Lausanne (EPFL)

Email: qin.liu@xxxxxxx