Hi Ian,

The detectSpeech function does, in general, draw aggressive boundaries around the speech region. It’s a simple algorithm that uses frame-based energy and spectral spread thresholding, and there is no logic to hold over or extend decisions except between regions. This means it does especially poorly for speech regions that begin or end with unvoiced speech. In some of our machine learning examples, we’ve found we get better results when we manually extend the ROI as a postprocessing step. The extendsigroi function might be useful for that.
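A minimal sketch of that postprocessing step is below. It assumes a mono recording and pads each detected region by 100 ms per side; the pad length is only an illustrative guess, not a recommended value, and the audio file is a sample that ships with Audio Toolbox rather than your sentence.

```matlab
% Load a mono speech recording (sample file shipped with Audio Toolbox).
[audioIn, fs] = audioread("Counting-16-44p1-mono-15secs.wav");

% Detect speech with the default settings.
% Each row of roi is [startSample endSample].
roi = detectSpeech(audioIn, fs);

% Assumed padding of 100 ms on each side; tune this for your material.
padSamples = round(0.1*fs);

% Extend every region to the left and to the right.
% Regions that overlap after the extension are merged.
roiExtended = extendsigroi(roi, padSamples, padSamples);

% Clamp the limits in case the extension runs past either end of the signal.
roiExtended = min(max(roiExtended, 1), numel(audioIn));
```

Using different left and right pads may be worth trying if onsets and offsets are clipped by different amounts.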

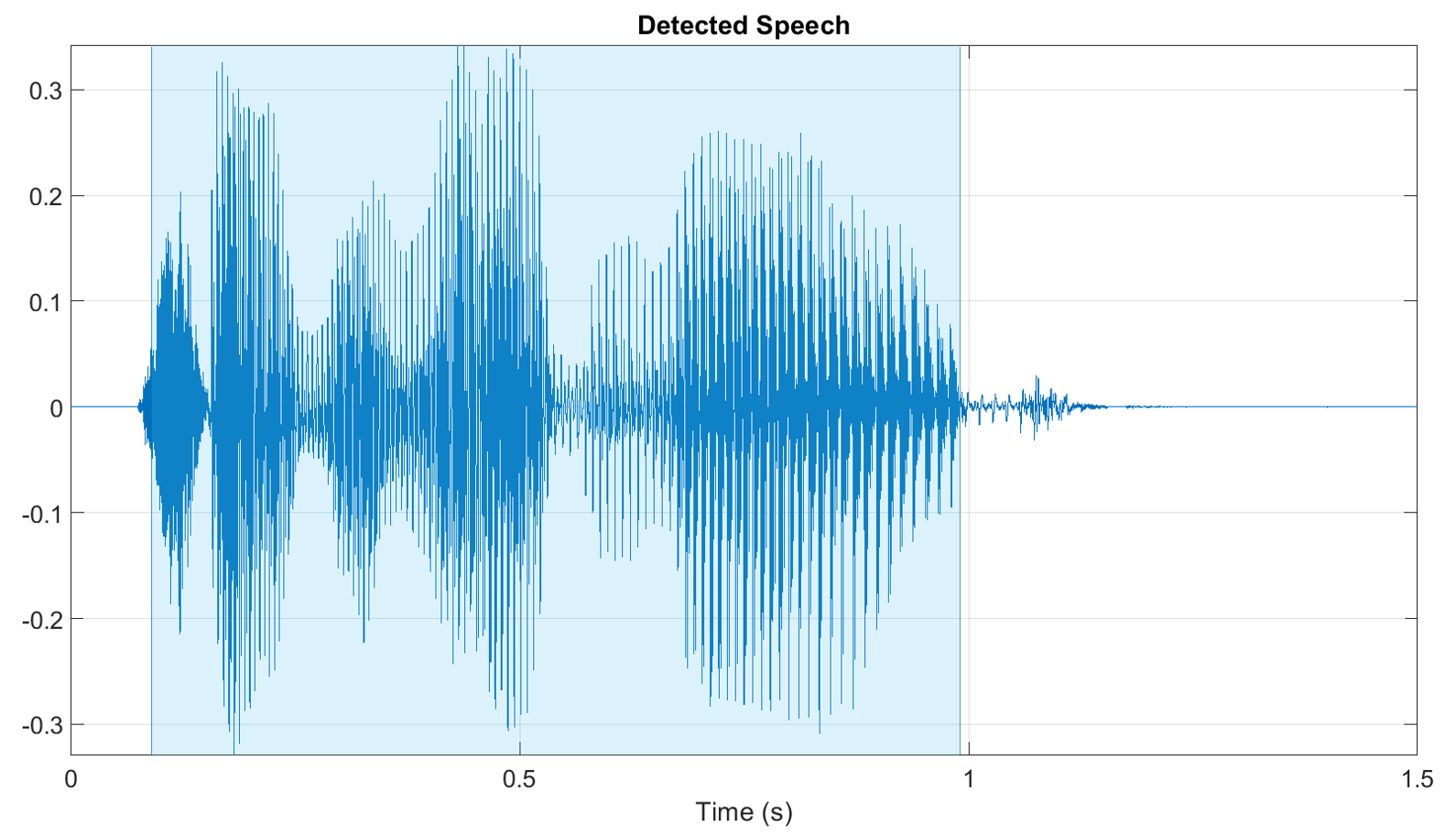

Another option is to use the detectspeechnn function, which has been available since R2023a. It also requires Deep Learning Toolbox, since it uses a deep learning model under the hood. On a sample of the same sentence you used, it performed well. It also has a number of parameters that give the type of control you’re looking for (e.g., ActivationThreshold, DeactivationThreshold).
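As a rough sketch of how those two parameters might be passed, assuming R2023a or later with Deep Learning Toolbox installed: the threshold values below are placeholders chosen for illustration, not tuned recommendations, and the audio file is a sample that ships with Audio Toolbox.

```matlab
% Load a mono speech recording (sample file shipped with Audio Toolbox).
[audioIn, fs] = audioread("Counting-16-44p1-mono-15secs.wav");

% Detect speech with the neural-network-based detector.
% The threshold values are illustrative assumptions; see the
% detectspeechnn documentation for the defaults and valid ranges.
roi = detectspeechnn(audioIn, fs, ...
    ActivationThreshold=0.4, ...
    DeactivationThreshold=0.2);

% Each row of roi is [startSample endSample].
disp(roi)
```

My understanding is that lowering the thresholds makes the detector more inclusive at region edges, but it is worth confirming the exact behavior against the documentation.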

Feel free to reach out to me directly if you’d like to discuss this further or if I can be of any help.

Best,

Brian Hemmat (Software Developer for Audio Toolbox at MathWorks).

From: AUDITORY - Research in Auditory Perception <AUDITORY@xxxxxxxxxxxxxxx> On Behalf Of Mertes, Ian Benjamin

Sent: Thursday, March 21, 2024 3:51 PM

To: AUDITORY@xxxxxxxxxxxxxxx

Subject: [AUDITORY] Assistance with MATLAB 'detectSpeech' function

Hello all,

I am using MATLAB R2023b and the Audio Toolbox. I would like to use the 'detectSpeech.m' function to find the boundaries of speech for a word recognition task.

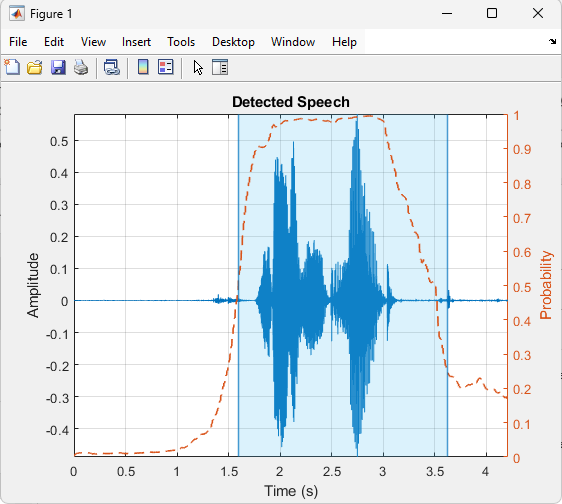

I'm having difficulty getting the function to correctly capture the boundaries. Below is an example figure using the sentence "Say the word laud." The blue shaded area is the detected region of speech. Note that it does not correctly detect the onset and offset of the sentence. The figure was generated using the default values of the function. I also tried manipulating the window duration, percent overlap, and merge duration but I was unable to improve the detection.

Any recommendations you may have would be greatly appreciated. Thank you!

Best,

Ian

—

Ian Mertes, PhD, AuD, CCC-A

Assistant Professor

Dept. of Speech and Hearing Science

University of Illinois Urbana-Champaign

208 Speech and Hearing Science Building

901 S. Sixth St. | M/C 482 | Champaign, IL 61820

217.300.4756 | imertes@xxxxxxxxxxxx

Dept. website: shs.illinois.edu | Lab website: hrl.shs.illinois.edu

Under the Illinois Freedom of Information Act any written communication to or from university employees regarding university business is a public record and may be subject to public disclosure.